Introduction: The Importance of Layered Architecture in Data Engineering

- Brief overview of the challenges in managing large datasets.

- Introduction to Databricks as a unified analytics platform.

- Why the Bronze, Silver, and Gold architecture is essential for efficient and scalable data engineering.

What is Databricks and How Does It Fit into Modern Data Engineering?

- Brief introduction to Databricks.

- Explanation of Databricks’ role in modern data pipelines.

- Overview of its integration with big data technologies like Apache Spark and Delta Lake.

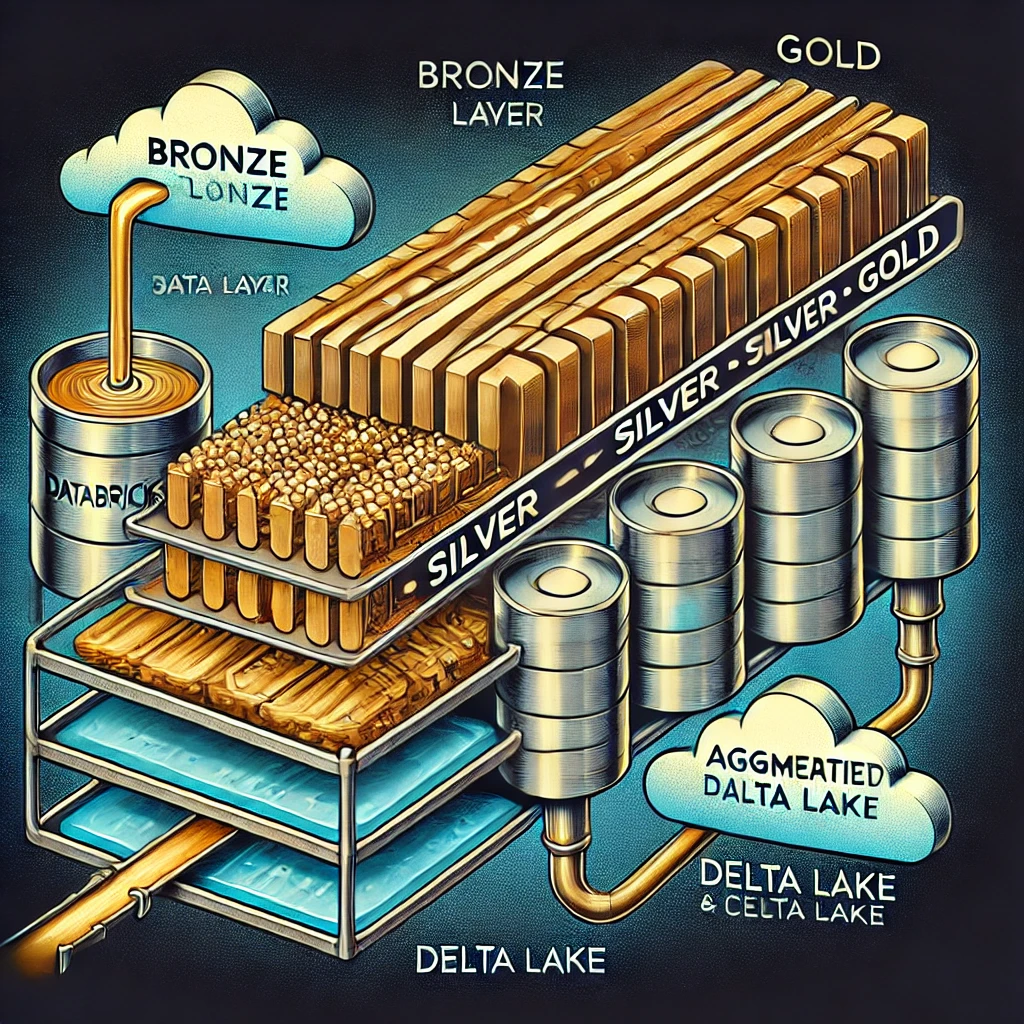

The Bronze, Silver, and Gold Architecture: An Overview

- Bronze Layer: Raw, unprocessed data.

- Silver Layer: Cleaned, transformed, and validated data.

- Gold Layer: Aggregated, analytics-ready data.

- Benefits of using this layered approach, such as simplifying transformations, improving performance, and making data accessible for various business needs.
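As a rough summary of the three layers, the sketch below records each layer's storage path and write mode as they appear in this article's examples. The `Layer` class and the `upstream_of` helper are hypothetical names introduced for illustration; they are not part of any Databricks API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Layer:
    name: str
    path: str        # storage location of the layer's Delta table
    write_mode: str  # how new data lands in this layer


# Paths and write modes mirror this article's examples; adjust to your workspace.
LAYERS = [
    Layer("bronze", "/mnt/bronze_data", "append"),
    Layer("silver", "/mnt/silver_data", "overwrite"),
    Layer("gold", "/mnt/gold_data", "overwrite"),
]


def upstream_of(layer_name: str) -> Optional[Layer]:
    """Return the layer that feeds `layer_name`, or None for the first layer."""
    names = [layer.name for layer in LAYERS]
    idx = names.index(layer_name)
    return LAYERS[idx - 1] if idx > 0 else None
```

Each layer reads only from the one before it, which is what keeps transformations simple to reason about: Gold never touches raw Bronze data directly.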

Implementing the Bronze Layer in Databricks

- Objective: Ingest raw data from multiple sources (e.g., IoT devices, databases, API feeds) into Databricks.

- Key Technologies: Databricks, Delta Lake, Apache Spark.

- Steps to Implement:

  - Set up an environment in Databricks.

  - Ingest raw data using Auto Loader or manual batch processing.

  - Store the raw data in Delta Lake format in the Bronze layer.

  - Treat the raw data as immutable (append-only) so it can serve as an audit trail.

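- Code Example:

```python
from pyspark.sql.functions import current_timestamp

# Read raw JSON incrementally with Auto Loader and stamp each record.
df_bronze = (
    spark.readStream.format("cloudFiles")
    .option("cloudFiles.format", "json")
    # Schema inference needs a schema location; reusing the checkpoint path is an assumption.
    .option("cloudFiles.schemaLocation", "/mnt/checkpoints/bronze")
    .load("/mnt/raw_data")
    .withColumn("ingestion_time", current_timestamp())
)

# Append to the Bronze Delta table; the checkpoint makes the stream restartable.
(
    df_bronze.writeStream.format("delta")
    .outputMode("append")
    .option("checkpointLocation", "/mnt/checkpoints/bronze")
    .start("/mnt/bronze_data")
)
```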
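One way to back the immutability requirement, assuming the Bronze table lives at `/mnt/bronze_data` as in the example above, is Delta Lake's `delta.appendOnly` table property, which blocks updates and deletes on the table. The helper names below are hypothetical, and `spark` is assumed to be the active SparkSession that a Databricks notebook provides.

```python
def append_only_sql(table_path: str) -> str:
    """Build the statement that marks a path-based Delta table as append-only."""
    return (
        f"ALTER TABLE delta.`{table_path}` "
        "SET TBLPROPERTIES ('delta.appendOnly' = 'true')"
    )


def enforce_append_only(spark, table_path: str) -> None:
    """Run the statement on an active SparkSession (assumed to be passed in)."""
    spark.sql(append_only_sql(table_path))
```

In a notebook this would be called once after the table exists, for example `enforce_append_only(spark, "/mnt/bronze_data")`. Appends from the ingestion stream continue to work; only updates and deletes are rejected.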

Implementing the Silver Layer in Databricks

- Objective: Clean and transform data for further analysis and reporting.

- Key Technologies: Delta Lake, Apache Spark.

- Steps to Implement:

  - Read raw data from the Bronze layer.

  - Apply transformations such as removing duplicates, handling null values, and standardizing formats.

  - Enrich the data by joining it with lookup tables.

  - Store the cleaned and enriched data in Delta Lake format in the Silver layer.

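- Code Example:

```python
# Read the raw records from the Bronze layer.
df_silver = spark.read.format("delta").load("/mnt/bronze_data")

# Keep one row per id, then fill nulls. "column" and "value" are placeholders
# for a real column name and its default value.
df_silver_cleaned = df_silver.dropDuplicates(["id"]).na.fill({"column": "value"})

# Overwrite the Silver table with the cleaned data.
(
    df_silver_cleaned.write.format("delta")
    .mode("overwrite")
    .save("/mnt/silver_data")
)
```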
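The enrichment step listed above is not covered by the snippet, so here is a minimal sketch of how it might look. It assumes the lookup table shares the `id` key with the Bronze data. The function name `build_silver` and the `required` parameter are hypothetical, and the inputs are expected to be PySpark DataFrames.

```python
def build_silver(df_bronze, df_lookup, key="id", required=("id",)):
    """Deduplicate, drop rows missing required fields, then enrich via a left join."""
    df_clean = (
        df_bronze
        .dropDuplicates([key])           # keep one row per business key
        .na.drop(subset=list(required))  # discard rows with nulls in required columns
    )
    # A left join keeps every cleaned row even when the lookup has no match.
    return df_clean.join(df_lookup, on=key, how="left")
```

A left join is used here so that records without a lookup match still reach the Silver layer with null enrichment columns. An inner join would silently drop them, which is usually harder to notice downstream.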

Implementing the Gold Layer in Databricks

- Objective: Aggregate and prepare data for business intelligence (BI) tools, reports, and machine learning.

- Key Technologies: Databricks, Delta Lake, Power BI, Tableau.

- Steps to Implement:

  - Read transformed data from the Silver layer.

  - Perform aggregations, such as calculating KPIs or summarizing data.

  - Store aggregated data in Delta Lake format in the Gold layer.

  - Integrate with BI tools for visualization and reporting.

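- Code Example:

```python
from pyspark.sql import functions as F

# Read the cleaned records from the Silver layer.
df_gold = spark.read.format("delta").load("/mnt/silver_data")

# Aggregate per category. Explicit aliases (illustrative names) avoid generated
# names such as "sum(sales)", which Delta rejects as column names by default.
df_aggregated = df_gold.groupBy("category").agg(
    F.sum("sales").alias("total_sales"),
    F.avg("profit").alias("avg_profit"),
)

# Overwrite the Gold table with the aggregated data.
df_aggregated.write.format("delta").mode("overwrite").save("/mnt/gold_data")
```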
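For the BI integration step, tools such as Power BI and Tableau generally query named tables rather than storage paths. The sketch below registers the Gold path as a table, assuming a workspace that allows path-based external tables. The helper names and the table name `sales_by_category` are hypothetical.

```python
def register_table_sql(table_name: str, location: str) -> str:
    """Build a statement that exposes an existing Delta path as a named table."""
    return (
        f"CREATE TABLE IF NOT EXISTS {table_name} "
        f"USING DELTA LOCATION '{location}'"
    )


def register_gold_table(spark, table_name: str, location: str = "/mnt/gold_data") -> None:
    """Run the statement on an active SparkSession (assumed to be passed in)."""
    spark.sql(register_table_sql(table_name, location))
```

In a notebook, `register_gold_table(spark, "sales_by_category")` would make the aggregated data queryable by name from a SQL endpoint that the BI tool connects to.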

Use Cases for the Bronze, Silver, and Gold Architecture in Databricks

- Real-Time Analytics: How the architecture supports real-time data ingestion and processing.

- Data Warehousing: How the layered approach improves querying performance and data management in data warehouses.

- Machine Learning: Preparing high-quality data for machine learning models using the Gold layer.